Supercharge your SOC with Attack Discovery

Elastic enables everyone to find the answers that matter. From all data. In real time. At scale. Elastic — The Search AI Company

Three solutions built on The Elastic Search AI Platform

Get relevant results at unprecedented speed with open and flexible enterprise solutions — powered by The Elastic Search AI Platform. Minimize downtime. Accelerate root cause analysis. Respond to threats at scale.

Observability

Accelerate problem resolution with open, flexible and unified observability powered by advanced ML and analytics.

Security

Automate protection, investigation, and response at scale using a unified solution with SIEM, EDR, and cloud security.

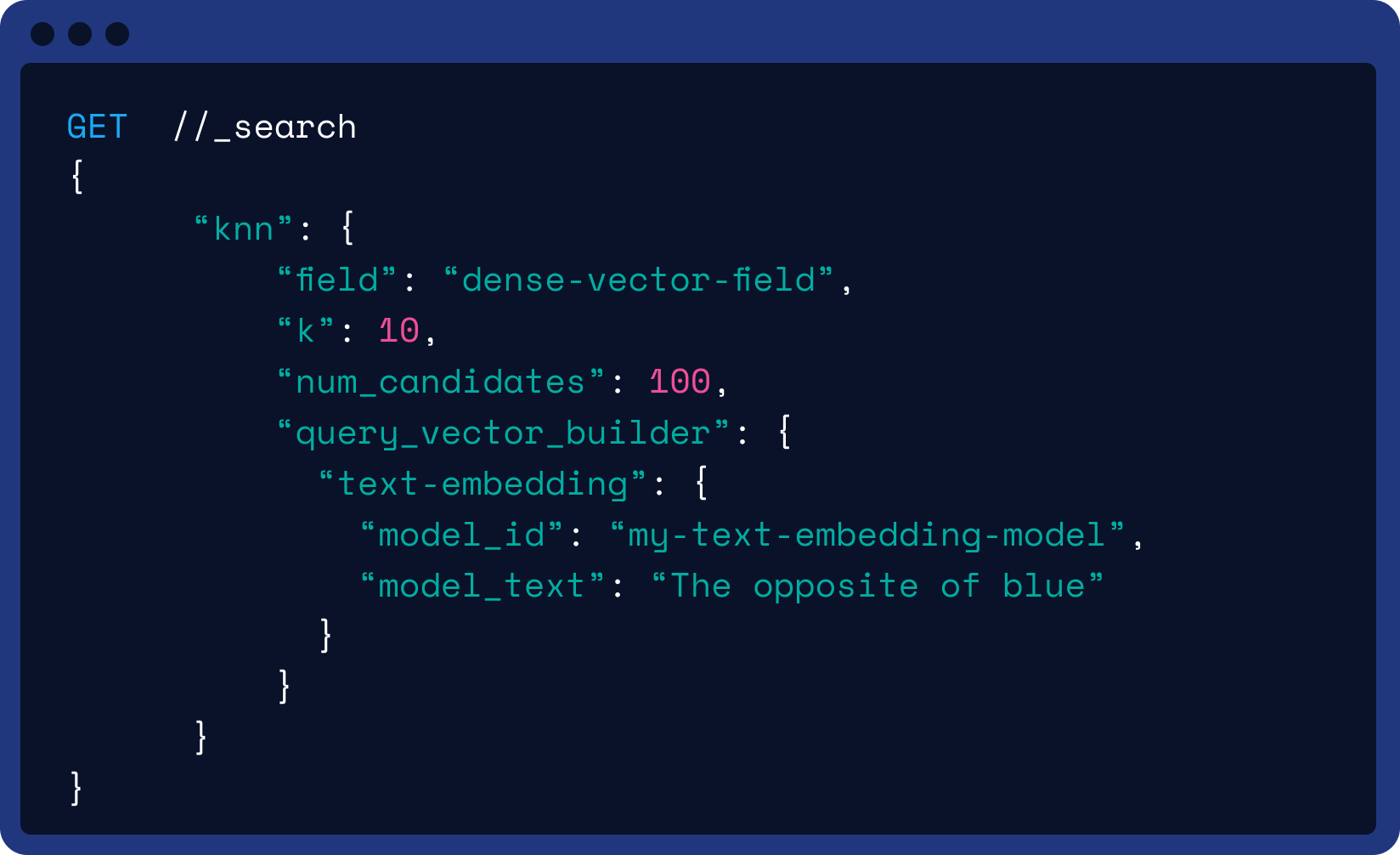

Search

Build powerful AI- and machine learning-enabled search applications for your customers and employees.

Build with the world's most used vector database — Elasticsearch
